Research on psychological treatments is, in the words of this journal, “scandalously under-supported” (see Nature 489, 473–474; 2012). Mental-health disorders account for more than 15% of the disease burden in developed countries, more than all forms of cancer. Yet it has been estimated that the proportion of research funds spent on mental health is as low as 7% in North America and 2% in the European Union.

Within those slender mental-health budgets, psychological treatments receive a small slice — in the United Kingdom less than 15% of the government and charity funding for mental-health research, and in the United States the share of National Institute of Mental Health funding is estimated to be similar. Further research on psychological treatments has no funding stream analogous to investment in the pharmaceutical industry.

This Cinderella status contributes to the fact that evidence-based psychological treatments, such as cognitive behavioural therapy (CBT), interpersonal psychotherapy (IPT), behaviour therapy and family therapy, have not yet fully benefitted from the range of dramatic advances in the neuroscience related to emotion, behaviour and cognition. Meanwhile, much of neuroscience is unaware of the potential of psychological treatments. Fixing this will require at least three steps.

Three steps

Uncover the mechanisms of existing psychological treatments. Exposure therapy, for example, is a very effective behavioural technique for phobias and anxiety disorders. This protocol originated in the 1960s from the science of fear-extinction learning and involves designed experiences with feared stimuli. So an individual who fears that doorknobs are contaminated might be guided to handle doorknobs without performing their compulsive cleansing rituals. They learn that the feared stimulus (the doorknob) is not as harmful as anticipated; their fears are extinguished by the repeated presence of the conditional stimulus (the doorknobs) without safety behaviours (washing the doorknobs, for example) and without the unconditional stimulus (fatal illness, for example) that was previously signalled by touching the doorknob.

But in OCD, for instance, nearly half of the people who undergo exposure therapy do not benefit, and a significant minority relapse. One reason could be that extinction learning is fragile — vulnerable to factors such as failure to consolidate or generalize to new contexts. Increasingly, fear extinction is viewed [5] as involving inhibitory pathways from a part of the brain called the ventromedial prefrontal cortex to the amygdala, regions of the brain involved in decision-making, suggesting molecular targets for extinction learning. For example, a team led by one of us (M.G.C.), a biobehavioural clinical scientist at the University of California, Los Angeles, is investigating the drug scopolamine (usually used for motion sickness and Parkinson's disease) to augment the generalization of extinction learning in exposure therapy across contexts. Others are trialling D-cycloserine (originally used as an antibiotic to treat tuberculosis) to enhance the response to exposure therapy [6].

Another example illustrates the power of interdisciplinary research to explore cognitive mechanisms. CBT asserts that many clinical symptoms are produced and maintained by dysfunctional biases in how emotional information is selectively attended to, interpreted and then represented in memory. People who become so fearful and anxious about speaking to other people that they avoid eye contact and are unable to attend their children's school play or a job interview might notice only those people who seem to be looking at them strangely (negative attention bias), fuelling their anxiety about contact with others. A CBT therapist might ask a patient to practise attending to positive and benign faces, rather than negative ones.

In the past 15 years, researchers have discovered that computerized training can also modify cognitive biases [7]. For example, asking a patient (or a control participant) to repeatedly select the one smiling face from a crowd of frowning faces can induce a more positive attention bias. This approach enables researchers to do several things: test the degree to which a given cognitive bias produces clinical symptoms; focus on how treatments change biases; and explore ways to boost therapeutic effects.

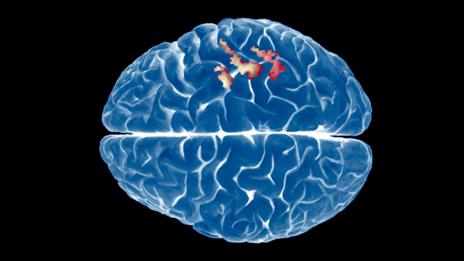

One of us (E.A.H.) has shown with colleagues that computerized cognitive bias modification alters activity in the lateral prefrontal cortex [8], part of the brain system that controls attention. Electrically stimulating neural activity in this region augments the computer training. Such game-type tools offer the possibility of scalable, 'therapist-free' therapy.

Optimize psychological treatments and generate new ones. Neuroscience is providing unprecedented information about processes that can result in, or relieve, dysfunctional behaviour. Such work is probing the flexibility of memory storage, the degree to which emotions and memories can be dissociated, and the selective neural pathways that seem to be crucial for highly specialized aspects of the emotional landscape and can be switched on and off experimentally. These advances can be translated to the clinical sphere.

For example, neuroscientists (including A.M.G.) have now used optogenetics, a technique in which light manipulates individual cells, to block [9] and produce [10] compulsive behaviour such as excessive grooming by targeting different parts of the orbitofrontal cortex. The work was inspired by clinical observations that OCD symptoms, in part, reflect an over-reaction to conditioned stimuli in the environment (the doorknobs in the earlier example). These experiments suggest that a compulsion, such as excessive grooming, can be made or broken in seconds through targeted manipulation of brain activity. Such experiments, and related work using light to turn 'normal' habits on and off, raise the tantalizing possibility of optimizing behavioural techniques to activate the brain circuitry in question.

Forge links between clinical and laboratory researchers. We propose an umbrella discipline of mental-health science that joins behavioural and neuroscience approaches to problems including improving psychological treatments. Many efforts are already being made, but we need to galvanize the next generation of clinical scientists and neuroscientists to interact by creating career opportunities that enable them to experience advanced methods in both.

New funding from charities, the US National Institutes of Health and the European framework Horizon 2020 should strive to maximize links between fields. A positive step was the announcement in February by the US National Institute of Mental Health that it will fund only those psychotherapy trials that seek to identify mechanisms.

Neuroscientists and clinical scientists could benefit enormously from national and international meetings. The psychological treatments conference convened by the mental-health charity MQ in London in December 2013 showed us that bringing these groups together can catalyse new ideas and opportunities for collaboration. (The editor-in-chief of this journal, Philip Campbell, is on the board of MQ.) Journals should welcome interdisciplinary efforts — their publication will make it easier for hiring committees, funders and philanthropists to appreciate the importance of such work.

What next

By the end of 2015, representatives of the leading clinical and neuroscience bodies should meet to hammer out the ten most pressing research questions for psychological treatments. This list should be disseminated to granting agencies, scientists, clinicians and the public internationally.

Mental-health charities can help by urging national funding bodies to reconsider the proportion of their investments in mental health relative to other diseases. The amount spent on research into psychological treatments needs to be commensurate with their impact. There is enormous promise here. Psychological treatments are a lifeline to so many — and could be to so many more.

References

1. Fairburn, C. G. et al. Am. J. Psychiatry 166, 311–319 (2009).

2. Hollon, S. D. et al. Arch. Gen. Psychiatry 62, 417–422 (2005).

3. Foa, E. B. et al. Am. J. Psychiatry 162, 151–161 (2005).

4. Simpson, H. B. et al. Depress. Anxiety 19, 225–233 (2004).

5. Vervliet, B., Craske, M. G. & Hermans, D. Annu. Rev. Clin. Psychol. 9, 215–248 (2013).

6. Otto, M. W. et al. Biol. Psychiatry 67, 365–370 (2010).

7. MacLeod, C. & Mathews, A. Annu. Rev. Clin. Psychol. 8, 189–217 (2012).

8. Browning, M., Holmes, E. A., Murphy, S. E., Goodwin, G. M. & Harmer, C. J. Biol. Psychiatry 67, 919–925 (2010).

9. Burguière, E., Monteiro, P., Feng, G. & Graybiel, A. M. Science 340, 1243–1246 (2013).

10. Ahmari, S. E. et al. Science 340, 1234–1239 (2013).

Affiliations

Emily A. Holmes is at the Medical Research Council Cognition & Brain Sciences Unit, Cambridge, UK, and in the Department for Clinical Neuroscience, Karolinska Institute, Stockholm, Sweden.

Michelle G. Craske is in the Department of Psychology and Department of Psychiatry and Biobehavioral Sciences, University of California, Los Angeles, USA.

Ann M. Graybiel is in the Department of Brain and Cognitive Sciences, McGovern Institute for Brain Research, Massachusetts Institute of Technology, Cambridge, Massachusetts, USA.